GPU Computing. ML Notebooks. One IDE.

A desktop IDE with a multi-cloud GPU marketplace, integrated JupyterHub, HPC cluster management, and a full terminal computer. Stop switching between five tools to run one experiment.

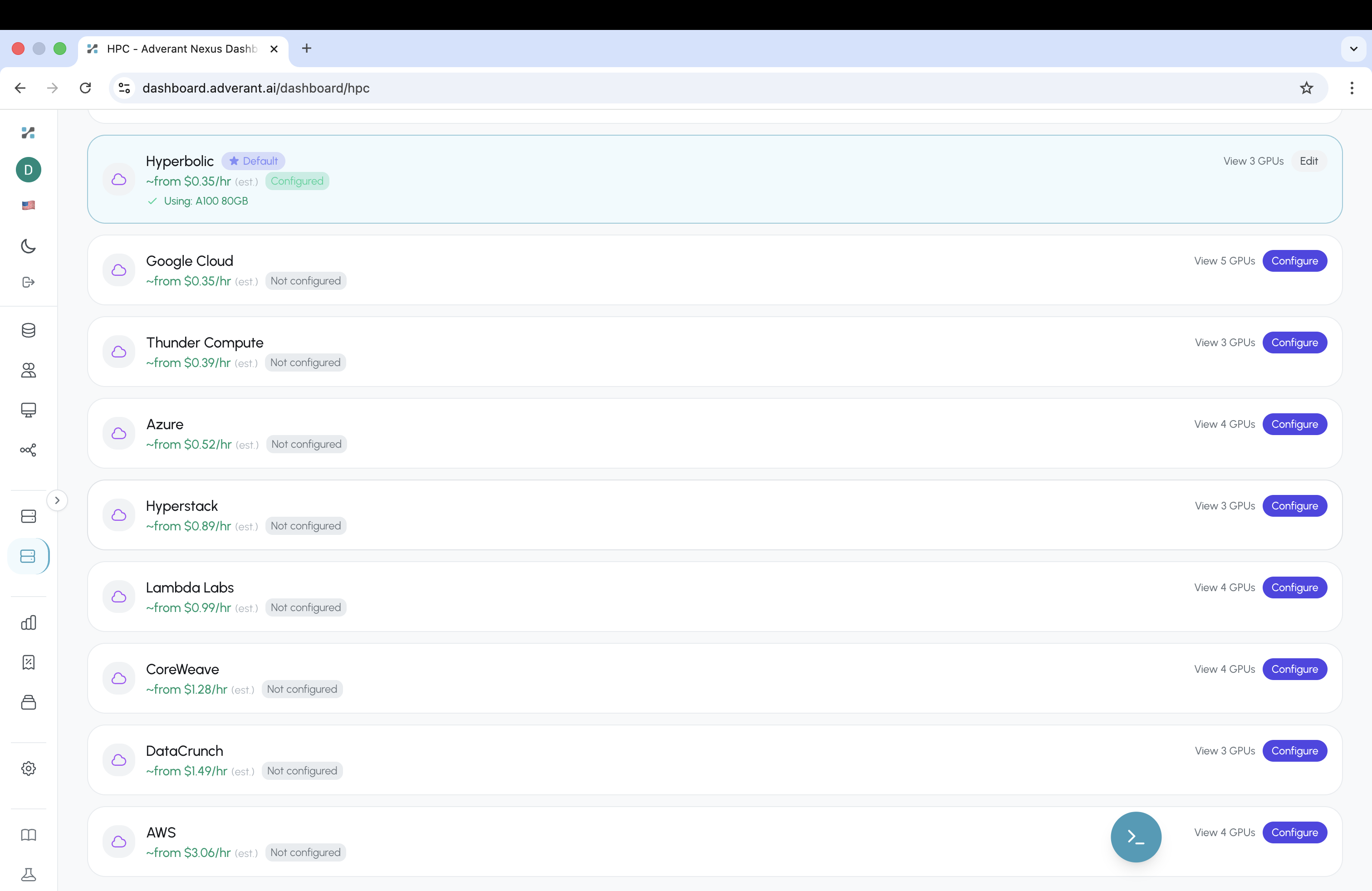

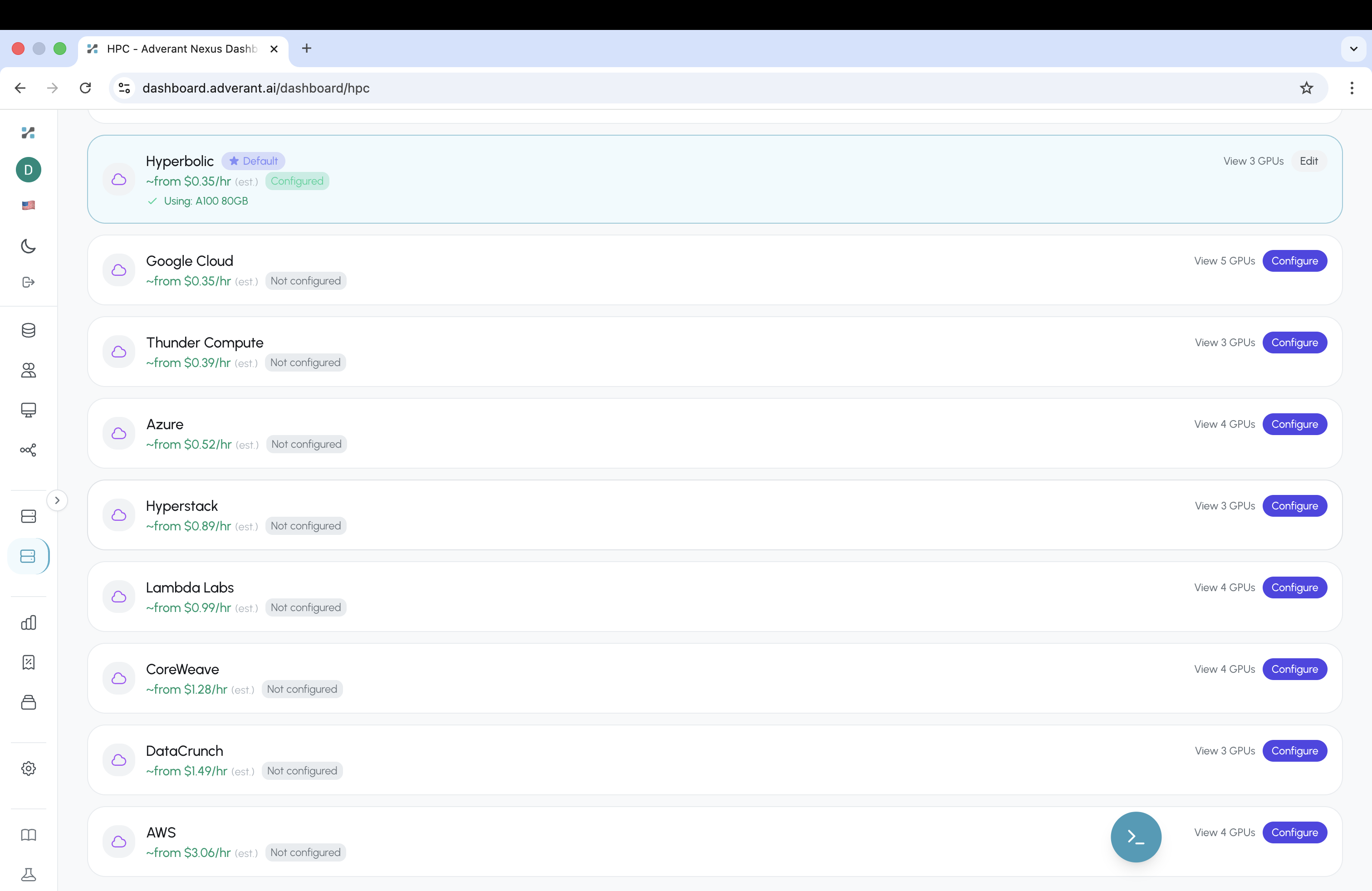

Compare GPU Pricing Across 9 Cloud Providers

Provision GPU compute from Hyperbolic, Google Cloud, Azure, Lambda Labs, CoreWeave, and more. See per-GPU pricing, configure clusters, and scale — all from one interface.

One Dashboard. Nine Providers.

Per-GPU pricing from Hyperbolic, Google Cloud, Thunder Compute, Azure, Hyperstack, Lambda Labs, CoreWeave, DataCrunch, and AWS. Select a provider, configure your cluster, and launch — without leaving the IDE.
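As a rough illustration of how per-GPU pricing turns into a cluster cost comparison, the sketch below ranks offers by total cost for a given GPU count and run time. The `GpuOffer` type, the provider names, and the prices are hypothetical placeholders; they are not the Nexus Forge API or real quotes from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOffer:
    """Hypothetical offer record; not a Nexus Forge type."""
    provider: str
    gpu_model: str
    usd_per_gpu_hour: float


def cluster_cost(offer: GpuOffer, gpus: int, hours: float) -> float:
    """Total cost = per-GPU hourly price x GPU count x hours."""
    return offer.usd_per_gpu_hour * gpus * hours


def rank_offers(offers, gpus, hours):
    """Return (provider, cost) pairs sorted from cheapest to most expensive."""
    costs = [(o.provider, cluster_cost(o, gpus, hours)) for o in offers]
    return sorted(costs, key=lambda pair: pair[1])


# Placeholder prices for illustration only.
OFFERS = [
    GpuOffer("provider-a", "example-gpu", 2.50),
    GpuOffer("provider-b", "example-gpu", 2.00),
    GpuOffer("provider-c", "example-gpu", 3.25),
]

if __name__ == "__main__":
    for provider, cost in rank_offers(OFFERS, gpus=8, hours=24):
        print(f"{provider}: ${cost:.2f}")
```

Ranking on total cost rather than the hourly rate alone makes it easier to compare cluster sizes and run lengths side by side.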

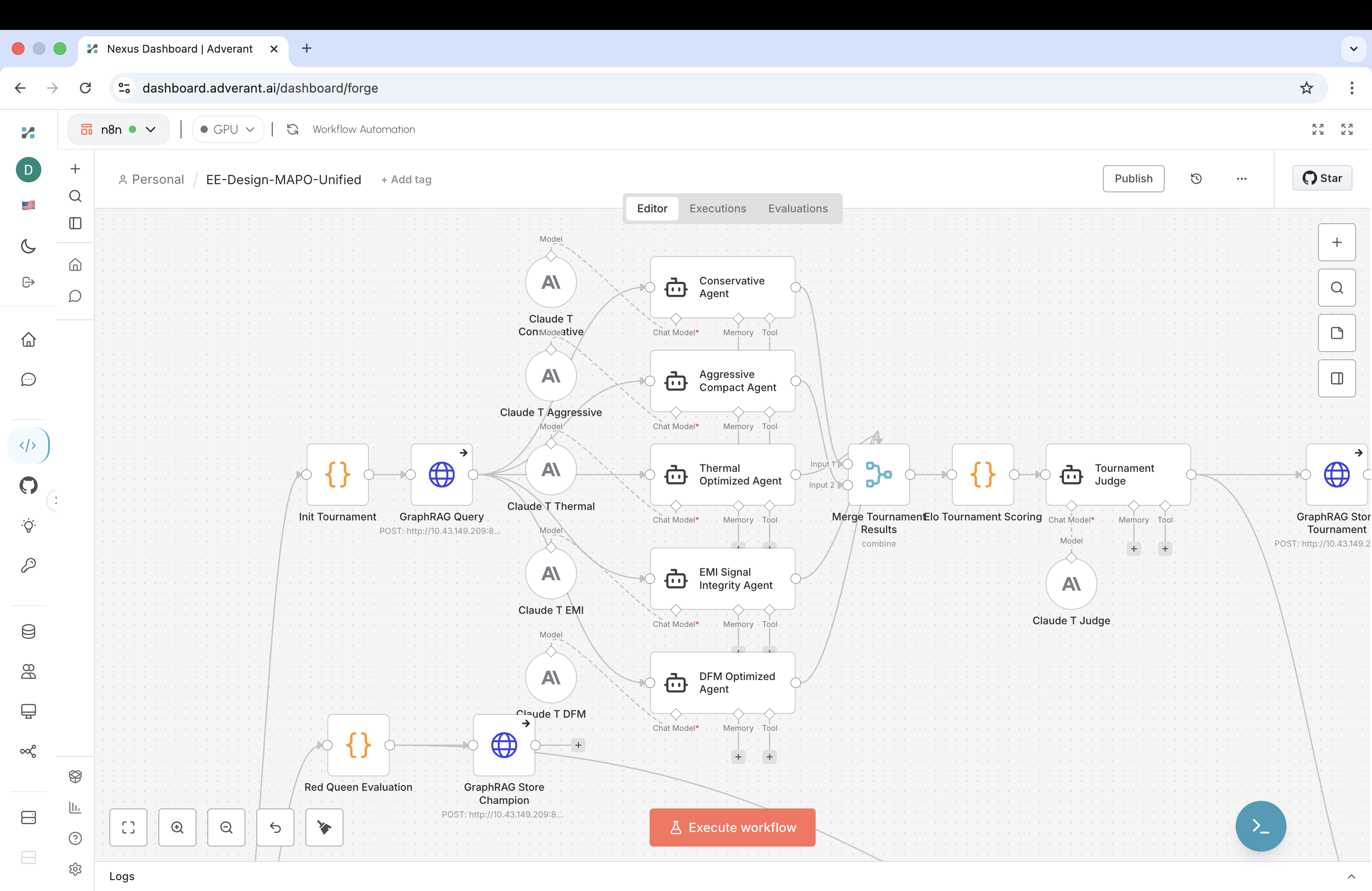

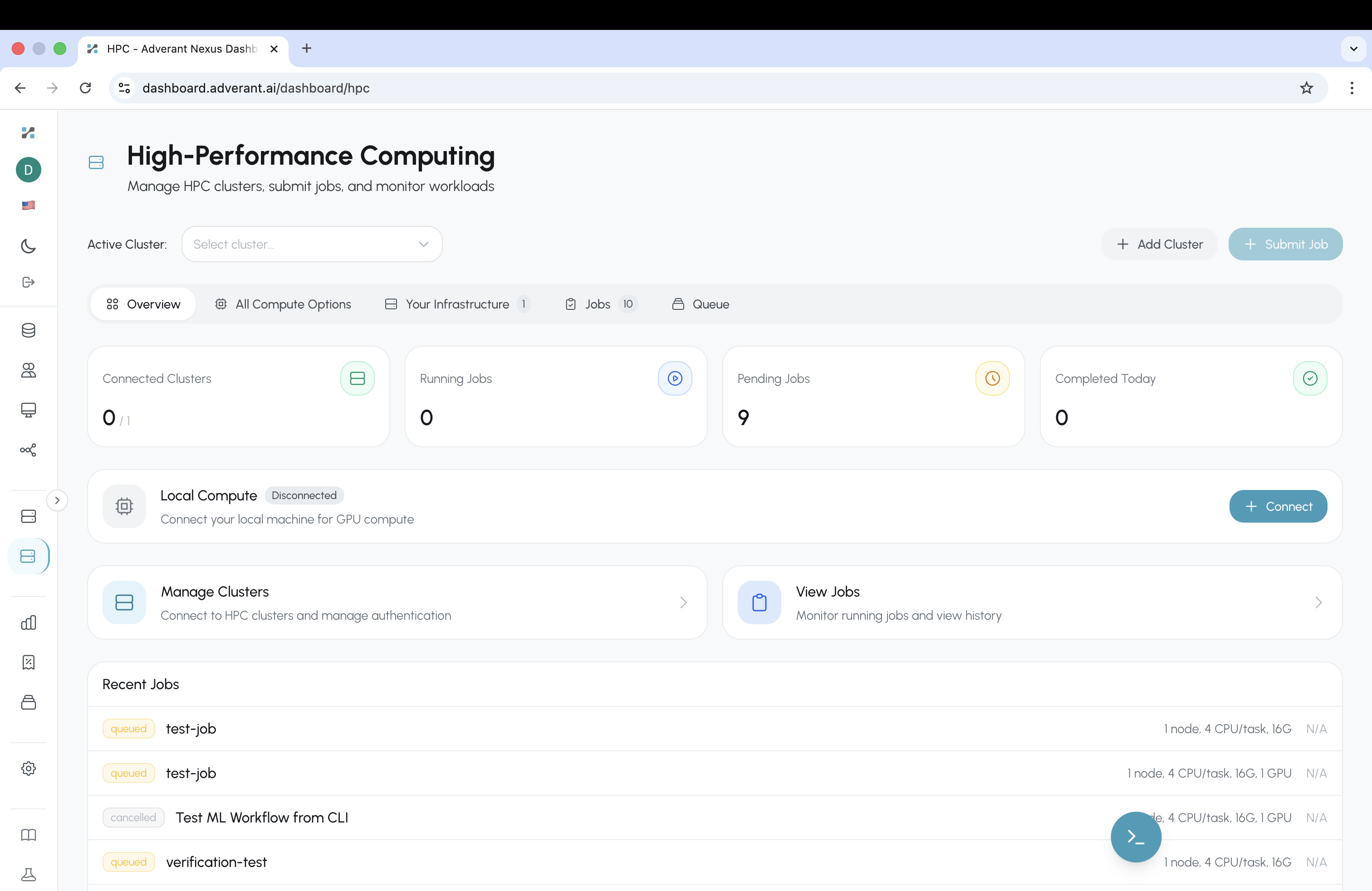

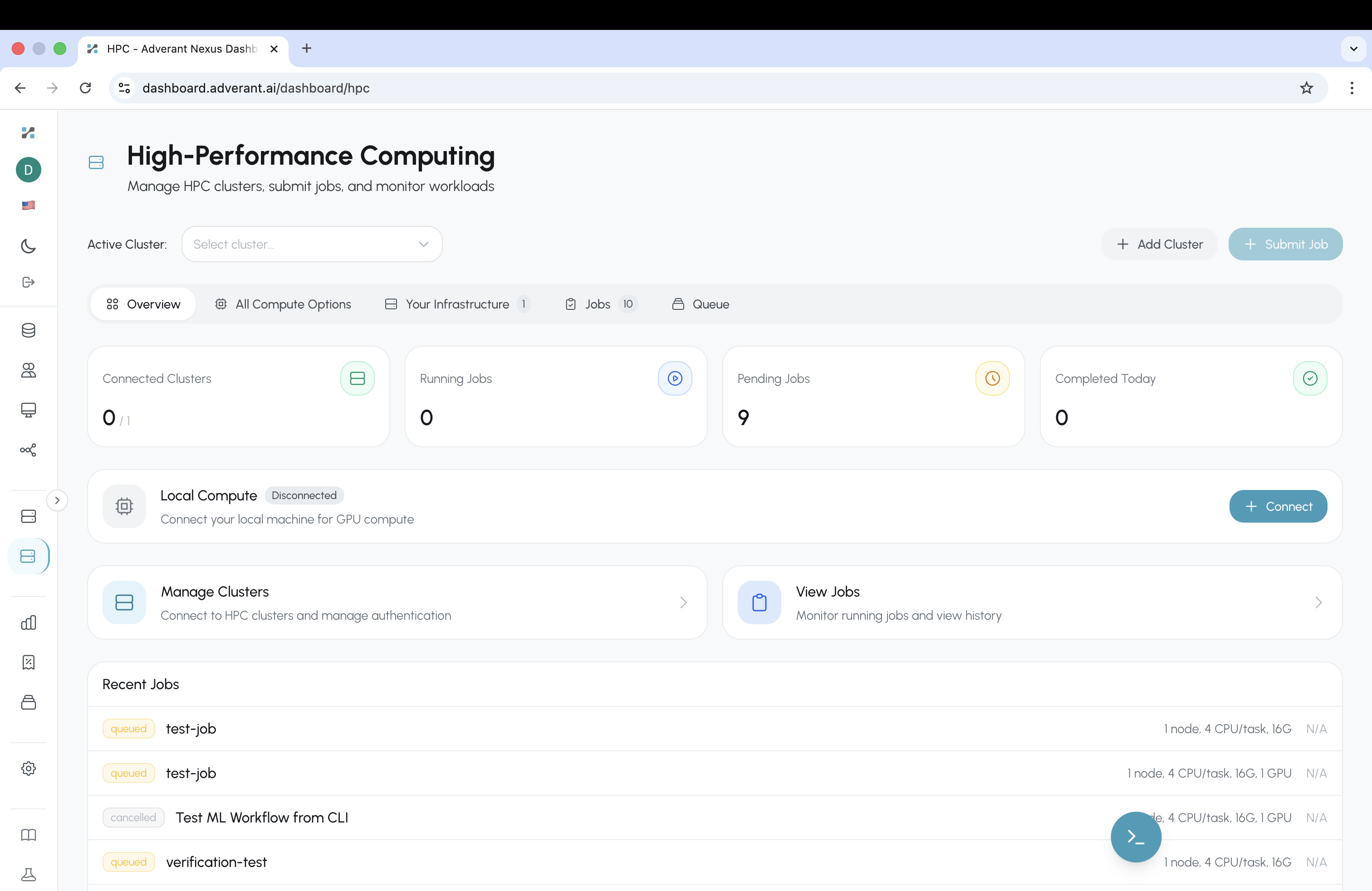

HPC Cluster Orchestration

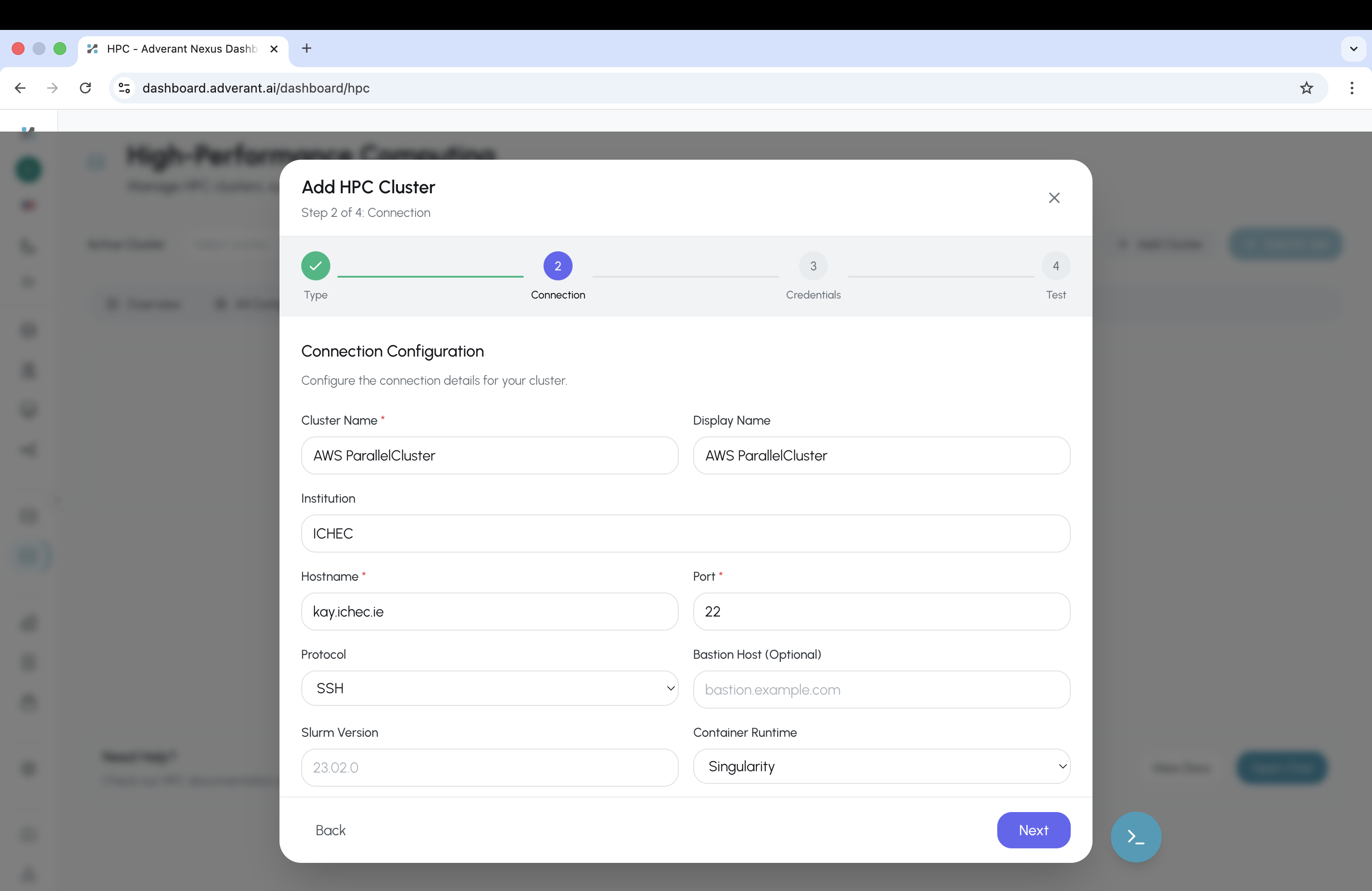

Manage Slurm clusters, submit jobs, monitor workloads. Connect AWS ParallelCluster, on-premises clusters, or your local GPU — one control plane for all compute.
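For readers new to Slurm, a job submission usually comes down to a batch script with `#SBATCH` directives. The sketch below renders one in Python. The directives are standard Slurm options, but the `render_sbatch_script` helper and its parameters are hypothetical and do not describe how Nexus Forge builds jobs internally.

```python
def render_sbatch_script(job_name, gpus, time_limit, command, nodes=1):
    """Build a Slurm batch script as a string.

    The #SBATCH directives are standard Slurm options; this helper and its
    parameter names are assumptions made for illustration.
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --gres=gpu:{gpus}",    # GPUs requested per node
        f"#SBATCH --time={time_limit}",  # wall-clock limit, e.g. HH:MM:SS
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_sbatch_script("train-demo", gpus=2, time_limit="01:00:00",
                               command="python train.py"))
```

Saved to a file, a script like this would be submitted on the cluster with `sbatch`.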

Cluster Dashboard

Live metrics from your HPC infrastructure: connected clusters, running jobs, and pending jobs. The dashboard also shows Local Compute connection status, cluster management controls, and a job queue with per-job status tracking.
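The running and pending counts on a dashboard like this can be thought of as a tally over job states. A minimal sketch, assuming each job is a dictionary with a `state` field holding a Slurm state name such as `RUNNING` or `PENDING`; the record layout and the `summarize_jobs` helper are hypothetical.

```python
from collections import Counter


def summarize_jobs(jobs):
    """Count jobs by state and return running, pending, and other totals."""
    counts = Counter(job["state"] for job in jobs)
    running = counts["RUNNING"]
    pending = counts["PENDING"]
    return {
        "running": running,
        "pending": pending,
        "other": len(jobs) - running - pending,
    }


# Hypothetical job records for illustration only.
SAMPLE_JOBS = [
    {"id": 101, "state": "RUNNING"},
    {"id": 102, "state": "PENDING"},
    {"id": 103, "state": "RUNNING"},
    {"id": 104, "state": "COMPLETED"},
]

if __name__ == "__main__":
    print(summarize_jobs(SAMPLE_JOBS))
```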

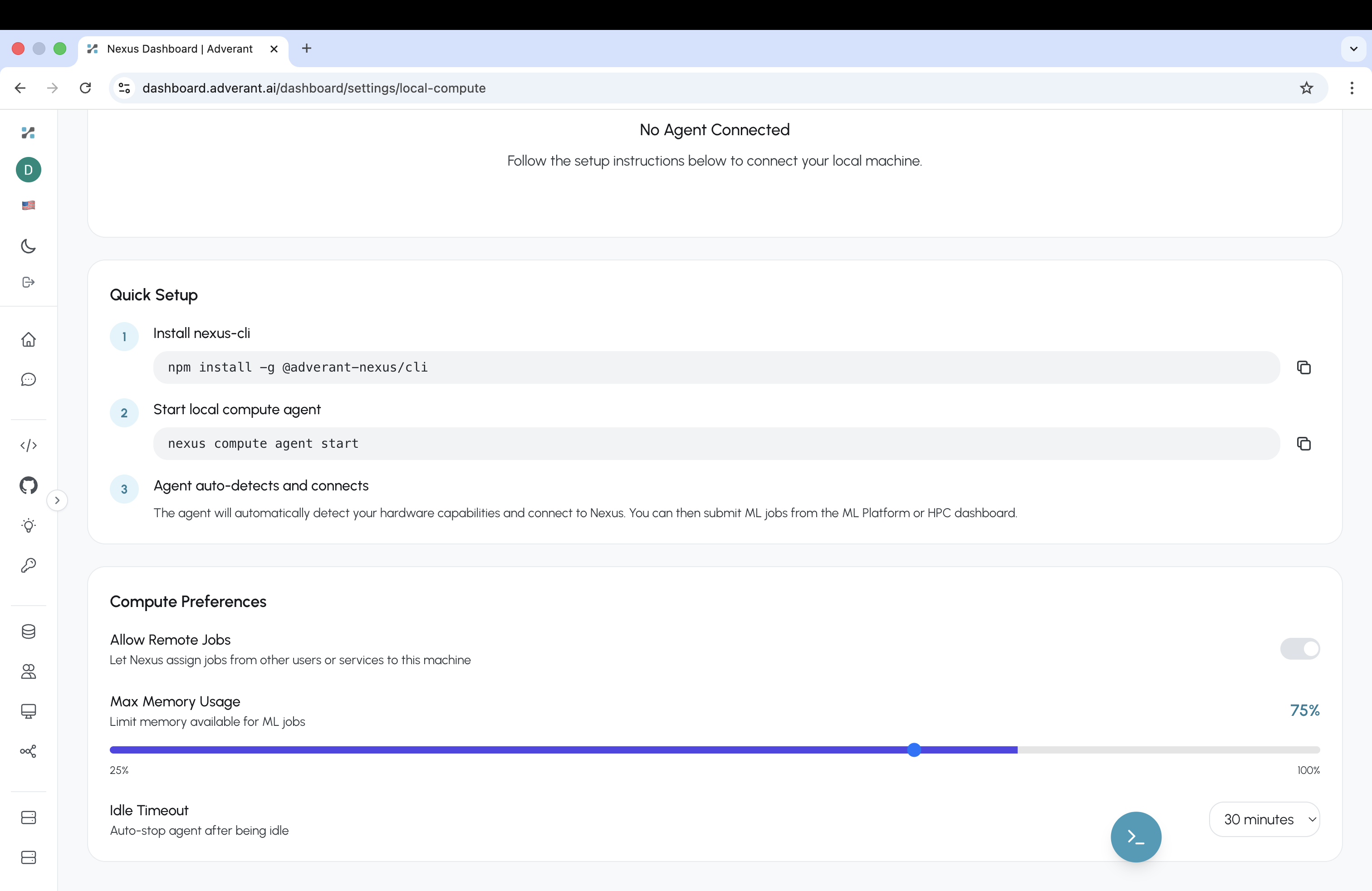

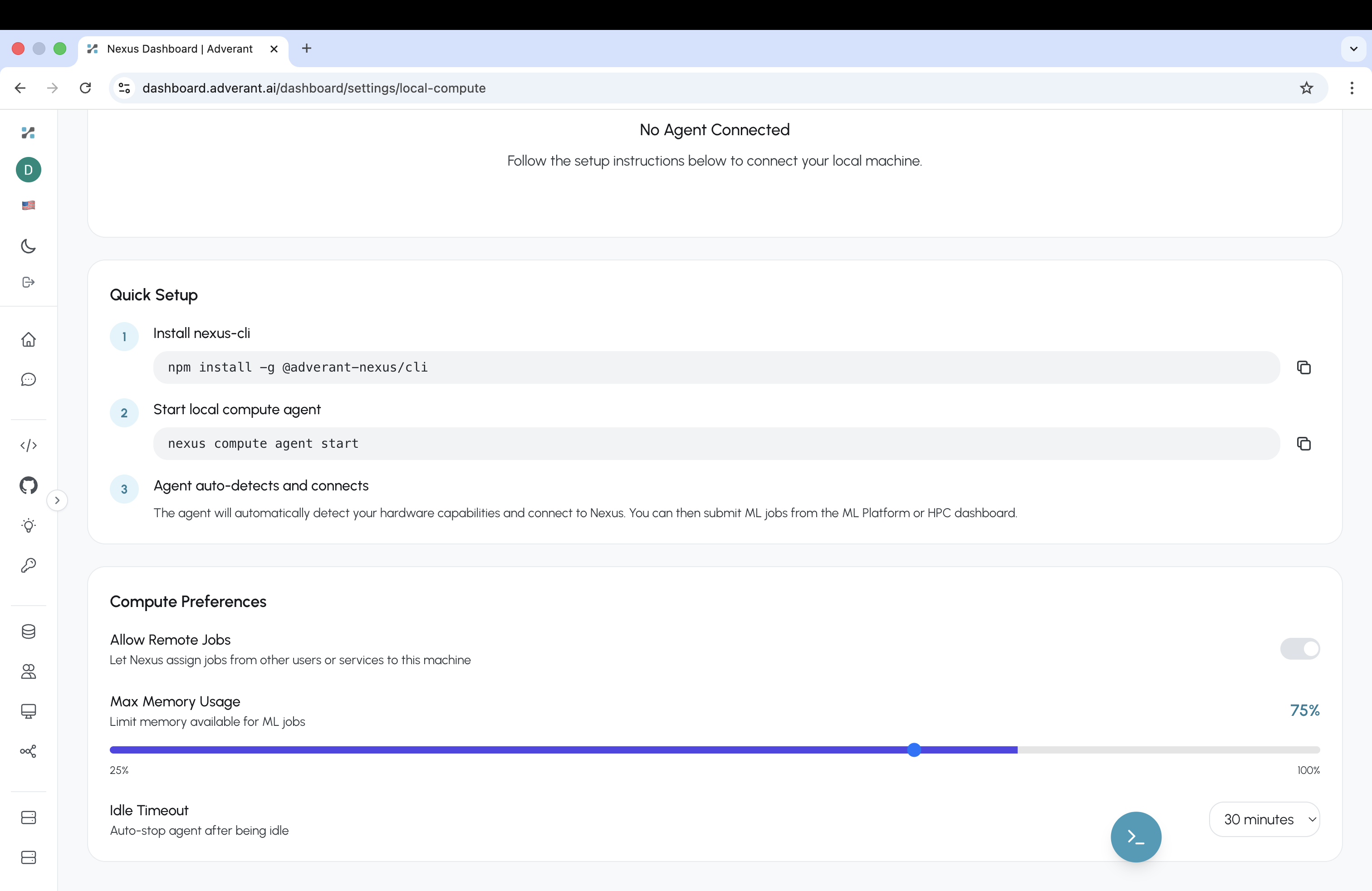

Local GPU in Three Commands

Install nexus-cli, start the compute agent, and let it auto-detect your hardware. Configure memory allocation limits and idle timeout preferences, and your local GPU joins the compute fabric.

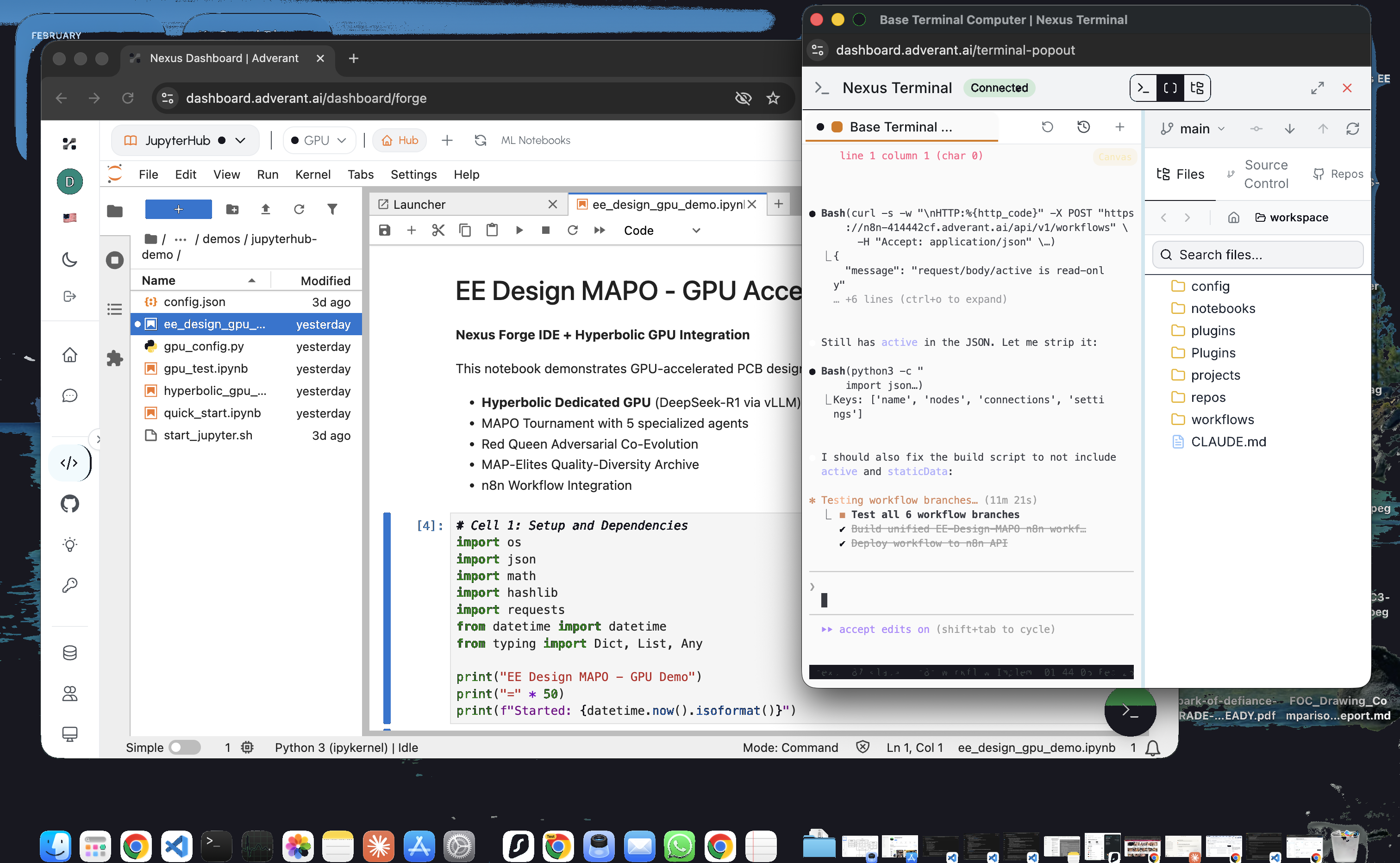

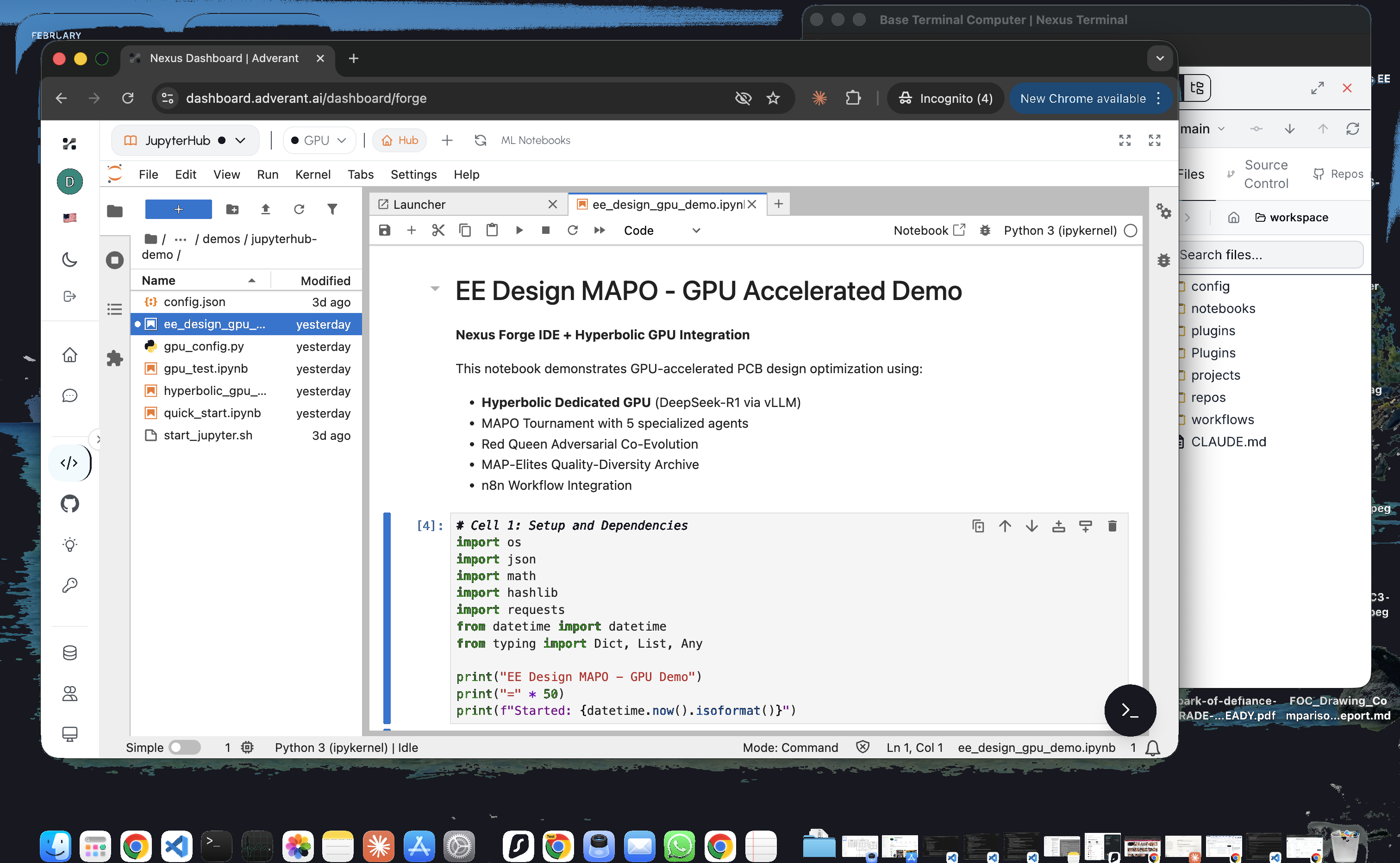

JupyterHub with GPU Acceleration

GPU-accelerated ML notebooks integrated with the Nexus terminal, file browser, and HPC compute fabric. Persistent sessions, real-time output, full terminal alongside your notebooks.

GPU Notebooks + Terminal Side-by-Side

JupyterHub running GPU-accelerated notebooks alongside the Nexus Terminal Computer. GitHub file browser on the left, GPU compute output in the terminal, notebook in the center.

Research-Grade GPU Compute

Hyperbolic Dedicated GPU, DeepSeek-R1 via vLLM, MAPO Tournament optimization with specialized experts, MAP-Elites Quality-Diversity Archive — production ML research workflows in JupyterHub.

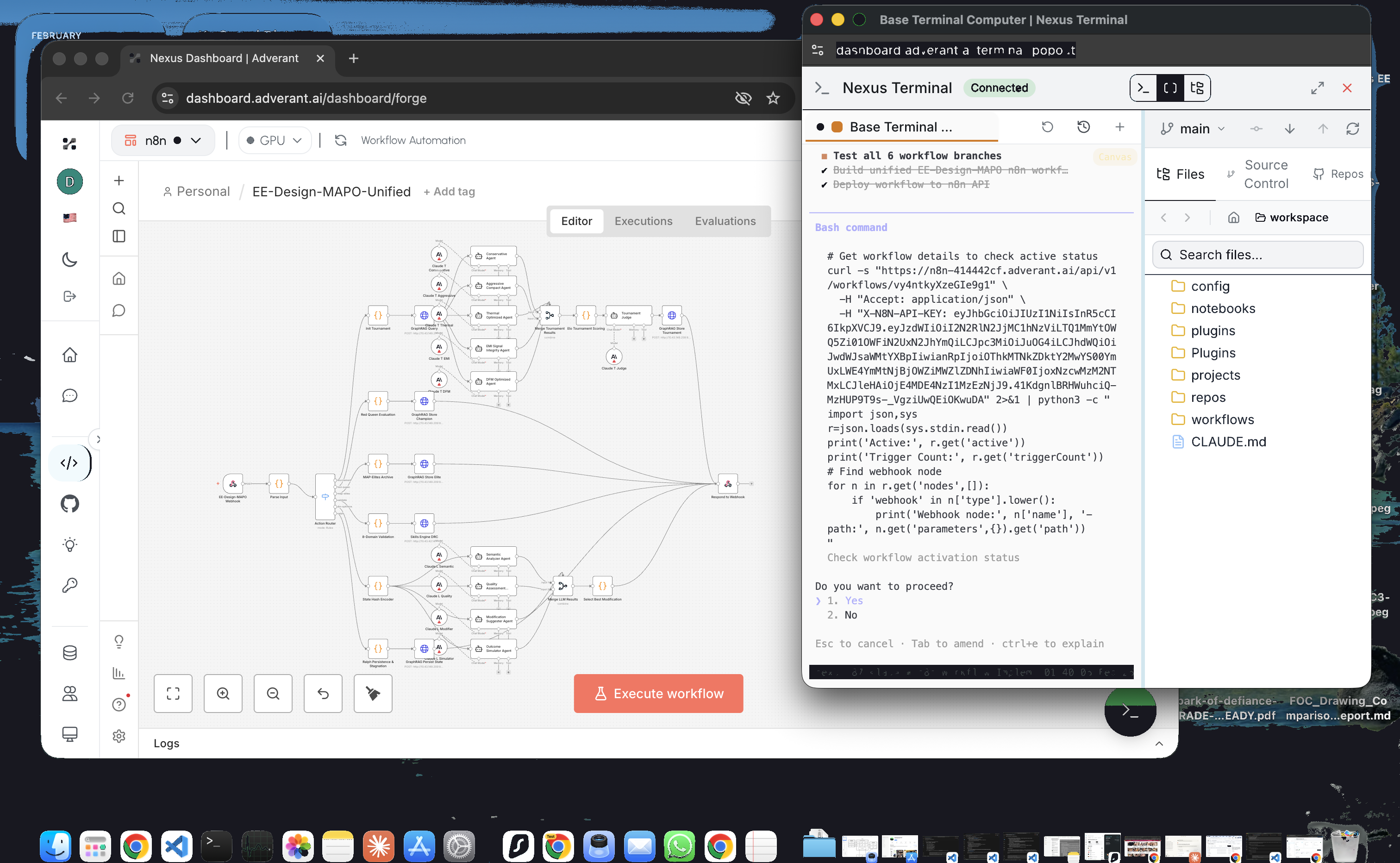

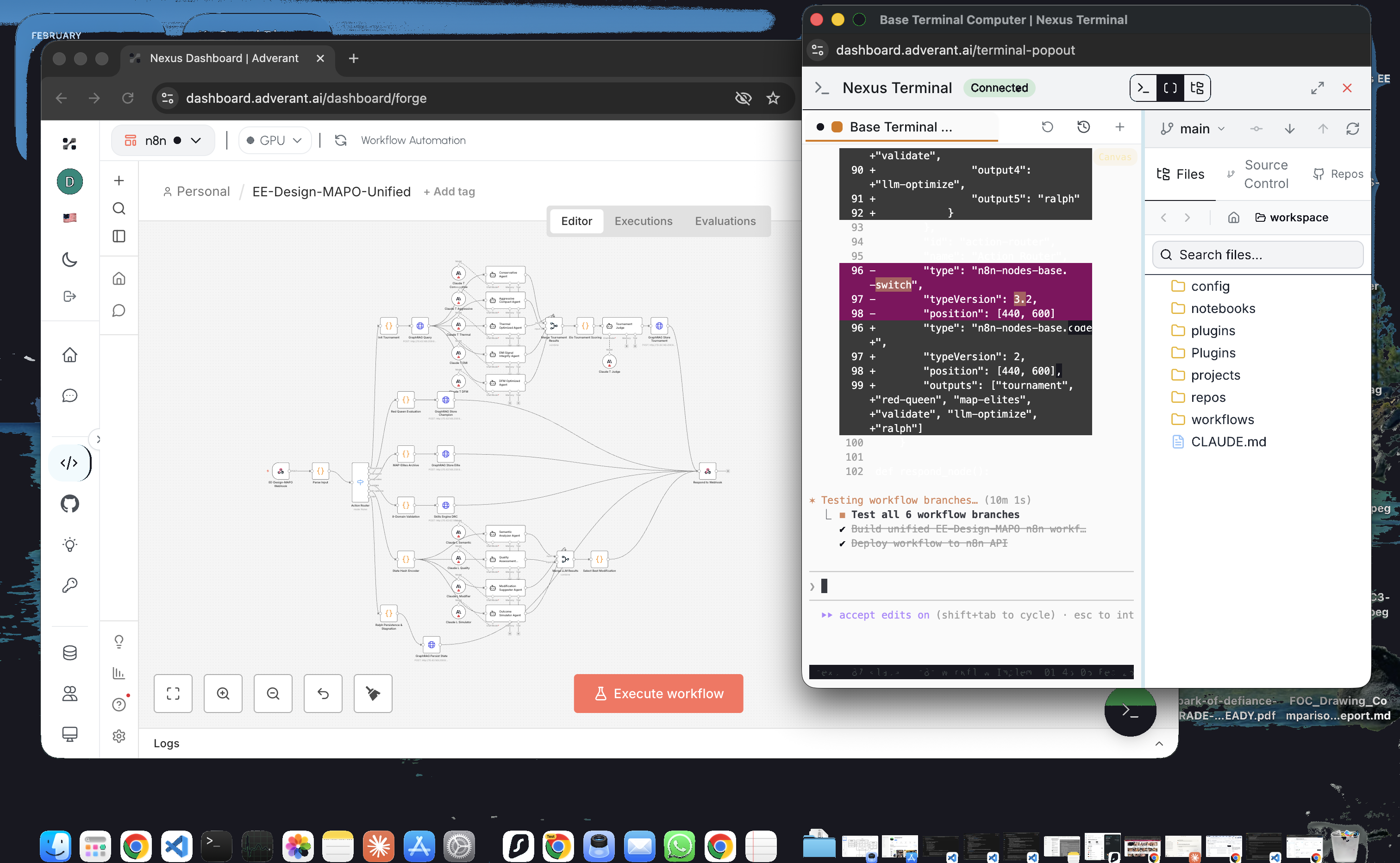

Full Terminal with GitHub Integration

Integrated terminal with GitHub file browser, branch switching, and live workflow execution. Browse configs, notebooks, plugins, projects, and repos from the sidebar.

Terminal + GitHub File Browser

n8n workflow editor alongside the Nexus Terminal Computer with GitHub file browser — config, notebooks, plugins, projects, repos, and workflows all in the sidebar. Switch branches, execute code, manage files.

Live JSON Inspection

Real-time JSON output from workflow node execution directly in the terminal. Debug data pipelines, inspect API responses, and validate outputs without switching tools.
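Outside the IDE, the same kind of check can be done with Python's standard `json` module. The sketch below pretty-prints a node's JSON output and reports any expected keys that are missing. The `inspect_output` helper and the sample payload are hypothetical and are not part of Nexus Forge or n8n.

```python
import json


def inspect_output(raw, required_keys):
    """Parse JSON text and return (pretty_text, missing_keys).

    Raises ValueError if the text is not valid JSON or is not a JSON object.
    """
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    missing = [key for key in required_keys if key not in data]
    pretty = json.dumps(data, indent=2, sort_keys=True)
    return pretty, missing


if __name__ == "__main__":
    # Hypothetical payload for illustration only.
    sample = '{"status": "ok", "rows": 3}'
    pretty, missing = inspect_output(sample, ["status", "rows", "elapsed_ms"])
    print(pretty)
    print("missing keys:", missing)
```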

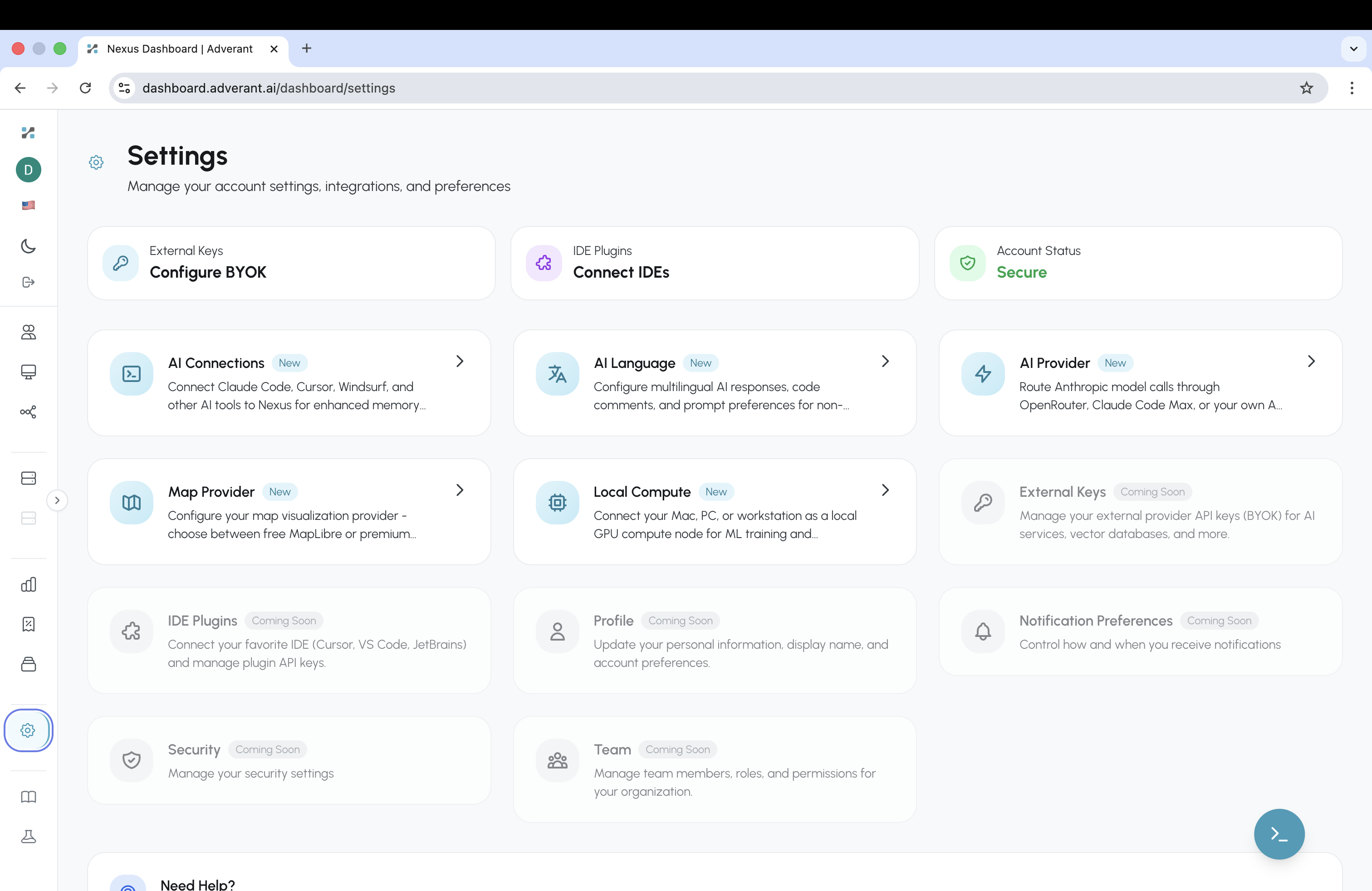

Configure Your Entire Stack

AI Connections (Claude, Cursor, Windsurf), provider routing via OpenRouter, local GPU compute for ML training, IDE plugin management, and bring-your-own-key support — all from one settings page.

Explore Every Capability

Real screenshots from production.

Compute Options: Multi-cloud GPU marketplace

HPC Dashboard: Cluster orchestration and job management

HPC Cluster Setup: SSH, Slurm, and container runtime configuration

Local Compute: Connect your own GPU with three commands

Start Building with GPU Compute

Download Nexus Forge — free, open source, MIT licensed. GPU marketplace, JupyterHub, HPC clusters, and terminal included.